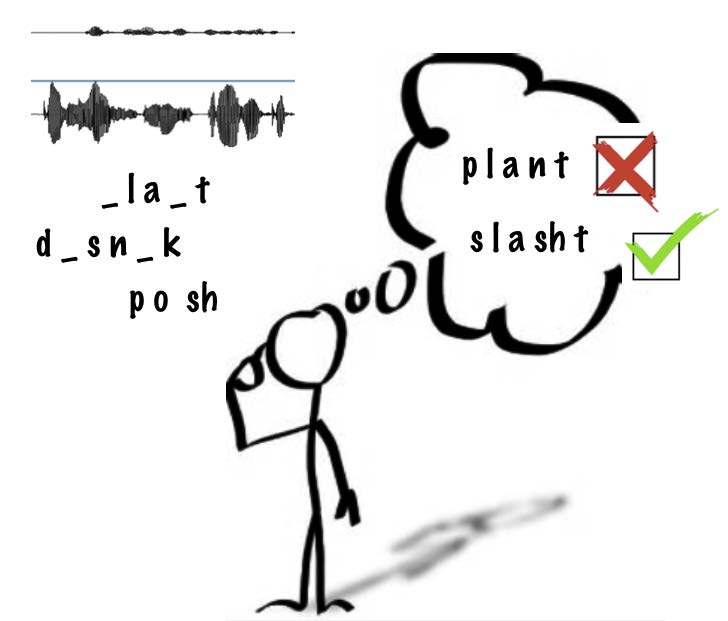

Listening to speech in noisy environments often leads to a situation where a listener perceives a different word or phrase from that actually spoken, producing what is known as a 'slip of the ear'. Dr María Luisa García Lecumberri leads a team in the UPV/EHU that has published the first large-scale corpus of robust speech confusions in English, consisting of more than 3000 words, and available online.

A new corpus of ‘slips of the ear' in English

The corpus follows on from an earlier corpus for Spanish listeners collected by a research group in the UPV/EHU

First publication date: 16/02/2017

Listening in quiet conditions is actually quite rare. Most of the time there is some kind of noise present, whether it is traffic, machinery, or simply other conversations. As native speakers with a rich experience of the language and the context in which speech occurs, we have a great capacity to reconstruct the part of the message obscured by noise. However, errors still occur at times. A group involving Dr García Lecumberri, Ikerbasque Research Professor Martin Cooke, along with researchers Dr Jon Barker and Dr Ricard Marxer of the University of Sheffield (UK) have identified 3,207 "consistent" confusions. The confusions are said to be consistent because, in every case, a significant number of listeners agree. This type of confusion is extremely valuable in the construction of models of speech perception, since any model capable of making the same error is very likely to be undergoing the same processes as those in human listeners.

The research study involved more than 300,000 individual stimulus presentations to 212 listeners in a range of different noise conditions. The resulting corpus is the only one of its kind for the English language and is available at http://spandh.dcs.shef.ac.uk/ECCC/. For each confusion, the corpus contains the waveforms of both the speech and the noise, a record of what a cohort of listeners heard, along with phonemic transcriptions. Distinct types of confusion appear with some frequency in the corpus. In the simplest cases what is clear is that the noise masks some parts of the word, forcing listeners to suggest a word that best fits the audible fragments (e.g., "wooden" -> "wood"; "pánico" -> "pan") or to substitute one sound for another ("ten" -> "pen"; "valla ->falla"). In other cases listeners appear to incorporate elements from the noise itself ("purse" -> "permitted"; "ciervo" -> "invierno"). Finally, the researchers find odd cases where there is little or no relation between the word produced and the confusion ("modern" -> "suggest"; "guardan -> pozo"). In these cases the way that the speech and noise signals interact is complex, and therefore interesting.

Dr García Lecumberri argues that "these studies help to reveal the mechanisms underlying speech perception, and the better we understand these processes, the more we can help at a technical and clinical level those listeners who suffer hearing and speech comprehension problems". The group has also elicited a similar corpus for the Spanish language that can be accessed from the same web page. "There are similarities and differences between Spanish and English confusions: Spanish is a highly-inflected language, leading to more confusions in word-final position; English has a larger number of monosyllabic words and a richer set of word-final consonants, leading to more substitution-type errors in this position" she adds. However, both languages show a similar pattern of confusion types in noise, with some sounds surviving better than others.

Additional information

Dr María Luisa García Lecumberri is Senior Lecturer in English Phonetics in the Faculty of Letters at the University of the Basque Country (Vitoria) and member of the Language and Speech research group, to which Ikerbasque Research Professor Dr Martin Cooke also belongs. Dr Jon Barker is Reader in Computer Science in the Speech and Hearing research group at the University of Sheffield, where Dr Ricard Marxer works as a research fellow. Corpus collection was funded by the EU Framework 7 Marie Curie project PEOPLE-2011-290000 "Inspire: Investigating Speech Processing in Realistic Environments".

Bibliographic reference

- A corpus of noise-induced word misperceptions for English

- The Journal of the Acoustical Society of America Volume 140, Issue 5

- DOI: 10.1121/1.4967185